Confidence Is All About The Size Of Your Sample

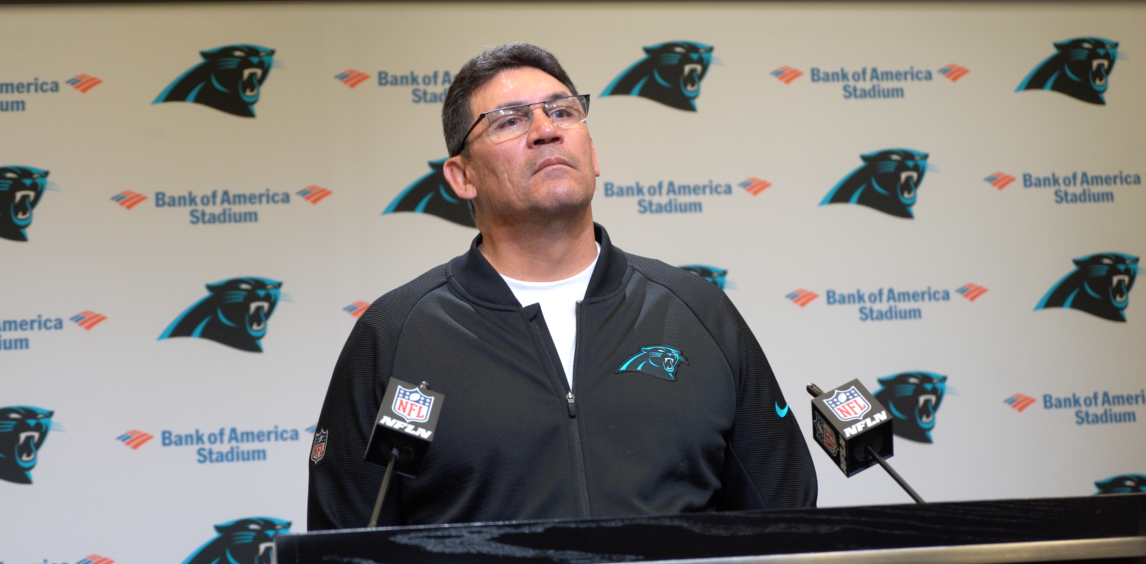

“[You get] to the point where you have to be careful because you’re going to suffer paralysis by analysis. You’re going to sit there looking at your card looking stuff up, and before you know it, the ball’s getting snapped.”

-Ron Rivera

There are two extremely important things to note when it comes to the kind of calculations discussed above – namely that all of these probabilities come with a certain degree of error or uncertainty and that these errors are multiplicative.

The reason for these errors is that the probabilities used are statistical rather than analytical – they are based on historical data rather than being innate characteristics of the sport. What this means is that the more data points you have in the sample you use to generate these probabilities, the smaller the error will be, but the more specific you ask your probabilities to be, the larger the error is going to be.

An easy example of this is if you think about the chances of converting a fourth down.

There were 551 fourth down attempts in the NFL last season, so if you want the probability of converting a fourth down, you have a relatively large sample size to work with. However, if you want the odds of converting a fourth-and-4, you have a much smaller number to work with. While I didn’t go through every play-by-play from last season to find the exact number, this would be made even worse if you want the odds of converting a fourth-and-4 from the opponent’s 31-yard line – and even this is before we try to account for the fact that your offense is likely not completely in line with the NFL average and neither is the opponent’s defense.
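To put rough numbers on that shrinking sample, here is a minimal sketch using the textbook normal approximation for the margin of error on a proportion. Only the 551 figure comes from the text; the 50% conversion rate and the two smaller sample sizes are placeholders I have made up purely for illustration, and `margin_of_error` is a hypothetical helper name.

```python
import math

def margin_of_error(p, n, z=1.96):
    """Approximate 95% margin of error for a rate p observed over n attempts."""
    return z * math.sqrt(p * (1 - p) / n)

# 551 is the league-wide attempt count quoted above. The 50% conversion rate
# and the two smaller sample sizes are hypothetical placeholders.
assumed_rate = 0.5
samples = {
    "all fourth downs": 551,
    "fourth-and-4 only (hypothetical)": 30,
    "fourth-and-4 from the 31 (hypothetical)": 5,
}

margins = {label: margin_of_error(assumed_rate, n) for label, n in samples.items()}
for label, margin in margins.items():
    print(f"{label}: +/- {margin:.1%}")
```

The exact values matter less than the trend: because the margin scales with one over the square root of the sample size, every extra filter you apply widens the error bars quickly.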

Not every offense has one of the greatest short-yardage weapons in NFL history – and not every defense is the ’85 Bears.

Therefore, if you want to know the probability of converting a fourth-and-4 from the Seahawks’ 31 for the Panthers, using adjustment factors to take into account the relative abilities of the offense and defense in question, you likely have room for error – error bars – that is on the same scale as your probability.

And if we go back to the example we used above, this is only part of the overall equation – we also need a probability function for every possible gain and then a set of probabilities of every single outcome from that point, each of which is going to be a number of probability functions.

What this means is that if you tried to work out the expected points of going for it on fourth down with every single knowable factor taken into account down to the wind strength and the air pressure then you could, but you would have error bars so large as to make your output absolutely meaningless.
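One way to see how quickly chained uncertainty gets out of hand is the standard first-order rule for a product of independent estimates, where the relative errors add in quadrature. This is a sketch under that independence assumption rather than a description of how any team actually does it, and the 20% relative error per step is a number I have invented for illustration.

```python
import math

def combined_relative_error(relative_errors):
    """First-order relative error of a product of independent estimates."""
    return math.sqrt(sum(r * r for r in relative_errors))

# Hypothetical: each probability in the chain is known to within 20%.
for steps in (1, 4, 9, 16):
    total = combined_relative_error([0.2] * steps)
    print(f"{steps} chained probabilities -> roughly {total:.0%} relative error")
```

Under these assumptions, a chain of four such probabilities already carries about twice the relative error of a single one, and it only grows from there as more factors are multiplied in.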

So now we get to the more interesting question – are ‘analytics’ a completely pointless exercise?

The short and obvious answer is no, but there’s more to it than just a simple answer.

It’s just that, as with the quarterback heat map discussed earlier, you don’t just need to be smart in how you go about your calculations, you also have to be smart about what it is you try to calculate. What this means in practice is that you have to be content with asking a question that isn’t quite perfect in order to get a response that is meaningful, with the key being to ask questions where there is a sample size big enough to allow for meaningful calculations.

There are essentially two approaches this can take – one being to miss out on bits of data in order to prevent overfiltering of the sample size, and the other being to group data into similar chunks, even if those aren’t exactly precise.

The first approach would probably mean that you don’t worry about taking the wind speed and air pressure into account, and that maybe you ignore the possibility of the field goal getting blocked and returned for a touchdown.

The second approach would be that rather than looking for the probability of making a 43-yard field goal, you look for the probability of making a field goal of between 40 and 45 yards. In reality, a sensible approach would be to mix these two tactics. Even in the best situations you are likely to come away with error bars north of 10%, but this is at least a good indicator of the relative outcome probabilities, with coaches able to “go with their gut” when the two options are essentially within the margin of error.
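The grouping approach can be sketched in a few lines. The kick tallies below are entirely hypothetical, invented only to show the mechanics, and the margin uses the same simple normal approximation as any other proportion.

```python
import math

# Hypothetical tallies: (distance in yards, kicks made, kicks attempted).
kicks = [
    (40, 18, 22), (41, 15, 19), (42, 16, 21),
    (43, 12, 16), (44, 14, 18), (45, 13, 18),
]

def rate_and_margin(made, attempts, z=1.96):
    """Success rate and approximate 95% margin of error."""
    p = made / attempts
    return p, z * math.sqrt(p * (1 - p) / attempts)

def pooled(rows):
    """Total (made, attempted) across a set of tallies."""
    return sum(m for _, m, _ in rows), sum(a for _, _, a in rows)

exact_rate, exact_margin = rate_and_margin(*pooled([k for k in kicks if k[0] == 43]))
bucket_rate, bucket_margin = rate_and_margin(*pooled([k for k in kicks if 40 <= k[0] <= 45]))

print(f"43 yards exactly: {exact_rate:.0%} +/- {exact_margin:.0%}")
print(f"40 to 45 yards:   {bucket_rate:.0%} +/- {bucket_margin:.0%}")
```

The trade-off is the one described above: the bucketed answer is to a slightly different question, but it rests on roughly seven times as many kicks in this made-up data, so its error bars are far tighter.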

However, there is a lot more to analytics than just the use of historical data to create decision trees.

Quantification

“If a team ever wants its analytics department to be part of the crucial workflow within the football calendar (as opposed to a bunch of nerds with spreadsheets intermittently answering the odd tricky question), it will need at least one top-flight integrator to make it all hang together. In order to grow the department, you need to add meaningful and observable value, and finding a person who can conceive, articulate, plan and deliver this is not an insignificant challenge.”

-Pro Football Focus Founder Neil Hornsby

Now, I am not the biggest fan of Pro Football Focus, but one of the things that they have pushed from the very beginning is the idea of quantifying performance.

The idea behind this is that by putting a number on how well somebody plays, you are able to get a more impartial and macroscopic perspective that simply isn’t possible with qualitative data. You and I might disagree whether a play should be scored a 6 or a 7 out of ten for any given player – but over 400 snaps those disagreements should, in theory at least, largely even out and so the difference between a 7.7 and a 7.4 over 400 snaps should be somewhat meaningful.
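The “evening out” can be roughed out with the standard error of a mean. This sketch assumes the grader disagreement on each snap is independent random noise with a spread of about one grade point, which is my assumption rather than anything measured; the 7.7, 7.4 and 400 figures are the ones used above.

```python
import math

def standard_error_of_mean(per_snap_sd, snaps):
    """Uncertainty in an average grade caused by per-snap noise."""
    return per_snap_sd / math.sqrt(snaps)

per_snap_sd = 1.0  # assumed spread of grader disagreement, in grade points
snaps = 400

se_one_player = standard_error_of_mean(per_snap_sd, snaps)
se_difference = math.sqrt(2) * se_one_player  # two independent averages
gap = 7.7 - 7.4

print(f"noise in one average grade: +/- {se_one_player:.2f}")
print(f"gap is {gap / se_difference:.1f} standard errors wide")
```

Under those assumptions the 0.3 gap is several standard errors wide, which is why it should be “somewhat meaningful”. If graders are biased in the same direction rather than randomly, that bias does not average away.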

The other advantage of quantifying in this way is that it allows for evaluation using these grades as an input, rather than just being the end themselves. If you want to know how left tackle play correlates with running play yardage, that is something you could calculate. These methods are still vulnerable to the issues with error and confidence described earlier, but by being able to correlate performance with outcomes, you are able to approach the idea of positional value. However, it is important to note that we are still quite a long way from this for a couple of reasons.

Here’s a look at the Carolina #Panthers’ top returning players in terms of coverage grade last season:

No. 1 – Luke Kuechly – 84.1

No. 2 – Rashaan Gaulden – 68.4

No. 3 – Eric Reid – 67.7

No. 4 – Donte Jackson – 67.2

No. 5 – James Bradberry – 64.9

-PFF CAR Panthers (@PFF_Panthers), June 21, 2019

The first is the lack of reliable input data. While PFF does provide grades on every play for every player in the NFL, their grading method is really too simplistic to be reliable enough for this kind of calculation. This is partly because the grades are still tied up with the outcomes, making it hard to even begin to claim that these data sets are independent – especially if you start factoring multiple players’ grades into a single calculation. It is also because, by grading the play itself rather than various aspects of the play for each player, their grades fail to reflect sufficient detail.

However, by far the biggest issue is the inability to account effectively for the coaching of the two teams, as the outcome arguably has just as much to do with the playcall for both offense and defense as it has to do with the players between the lines. This is something that could be dealt with by including the offensive and defensive playcalls in the calculation, but this would lead to extremely small sample sizes and make the data all-but-meaningless.

Because of this, it is currently almost impossible to ascribe a quantified value to any given position or player with any degree of confidence. Sure, I can say that Zeke Elliott is better than Elijah Hood without a huge number of people disagreeing with me, but it’s hard to say that with analytical data that has any more credibility than resorting to more obviously flawed but easily available stats such as yards-per-carry that don’t take context into account at all.

There is some hope in this regard – there is no reason why a team couldn’t generate their own improved version of PFF grades that broke down player performance into a number of position-specific entities, and ask those providing the grades to also provide a ‘confidence factor’ for each grade so that they can be better amalgamated into usable grades.
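One simple way such a confidence factor could be used is as the weight in a weighted average. This is only one possible scheme, not something any team is known to use, and the grades, the 0-to-1 confidence scale and the function name are all hypothetical.

```python
def confidence_weighted_grade(graded_plays):
    """Average of (grade, confidence) pairs, weighted by confidence."""
    total_weight = sum(confidence for _, confidence in graded_plays)
    if total_weight == 0:
        raise ValueError("at least one grade needs a non-zero confidence")
    weighted_sum = sum(grade * confidence for grade, confidence in graded_plays)
    return weighted_sum / total_weight

# Hypothetical grades out of ten, each with the grader's confidence (0 to 1).
plays = [(8.0, 1.0), (4.0, 0.25), (6.0, 0.5)]

plain_average = sum(grade for grade, _ in plays) / len(plays)
weighted_average = confidence_weighted_grade(plays)

print(f"plain average:    {plain_average:.2f}")
print(f"weighted average: {weighted_average:.2f}")
```

Here the low-confidence 4 drags the plain average down more than it does the weighted one, so plays the grader was unsure about count for less without being thrown away entirely.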

Further to this, the development of machine learning systems – something which I don’t even begin to claim to be an expert in – offers the possibility of non-regression based analysis which should help to deal with some of the issues inherent in the way some of these things have been calculated in the past. There is, though, still a significant challenge to overcome in being able to describe the playcalls in a way that conveys the relevant information without massively shrinking the sample sizes.

So Can We ‘Moneyball’ The NFL?

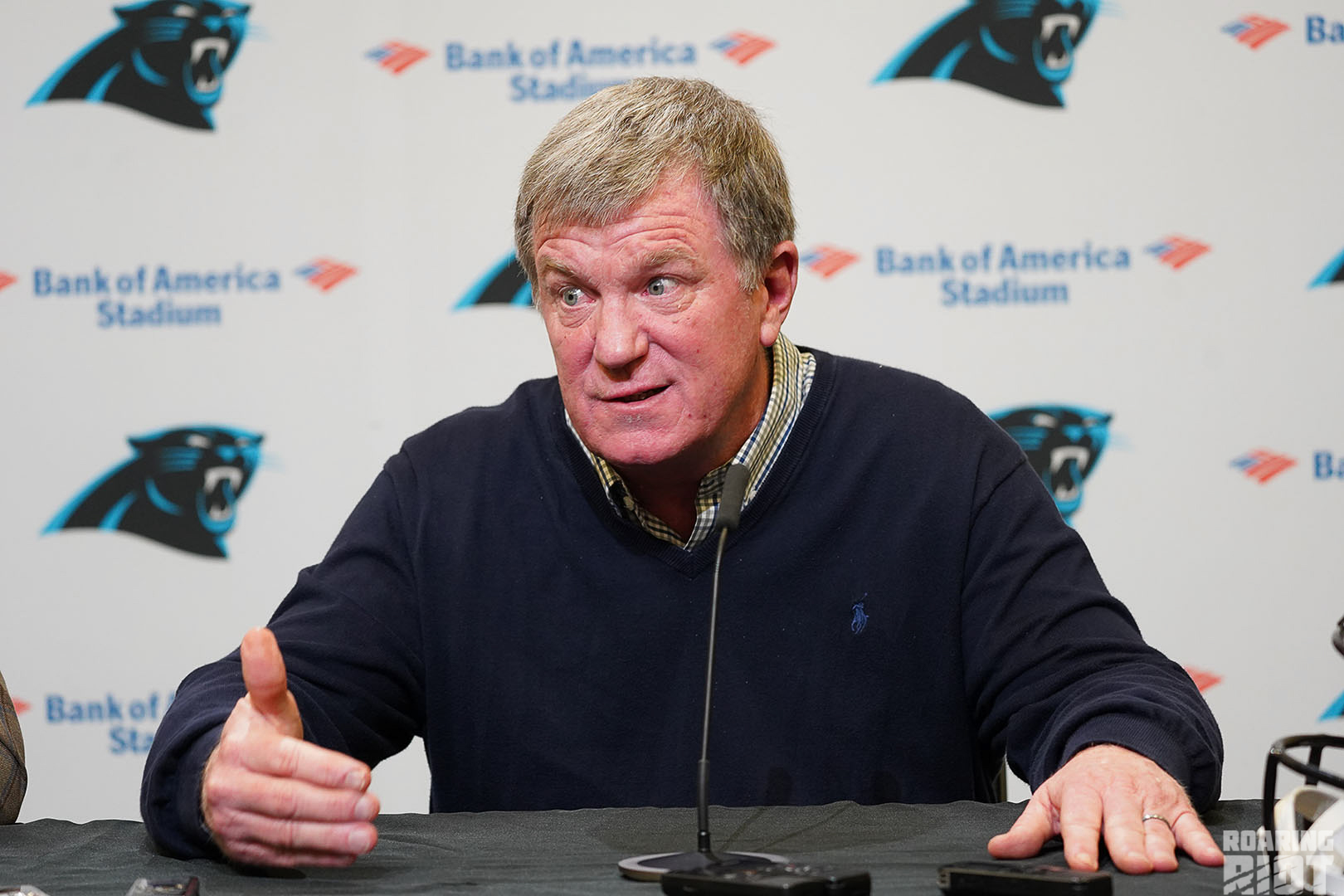

“Of course, there are teams that are not quite that sophisticated, and it is getting more and more difficult for them to compete. The data arms race is very real, and it is widening the gap between those who engage, and those who don’t.”

-Pro Football Focus majority owner Cris Collinsworth

The short answer is no.

MLB plays over ten times more games per season – and so has much larger sample sizes to deal with – and from an outcomes point of view, the absence of a ‘yardage’ aspect makes things an awful lot simpler, as does the lack of a play clock. A baseball at-bat is almost perfectly designed for quantification, and while things like player ability adjustments and situational adjustments such as weather and fatigue are not really feasible in a rigorous mathematical sense, the game is essentially described by a number of discrete outcomes – that is not the case for the NFL.

An easy way of putting this into perspective is the following: there are twelve different pitch counts possible in baseball, from 0-0 to 3-2, while in the NFL, even if you ignore the possibility of penalties, goal-to-go and negative plays, there are still 28 different down-and-distance options, and that is before you even get to the idea of field position, which has 98 different, vaguely-discrete options. Not to mention different playing surfaces, weather conditions and the mere fact that there are 11 players on the team that all must play in concert – you can make all the correct decisions but they’re rendered moot if one of your defenders lines up in the neutral zone.
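The arithmetic behind that comparison is quick to sketch. The pitch counts are enumerated directly; the 28 and 98 are simply the figures quoted above, taken as given rather than derived.

```python
from itertools import product

# Every balls-strikes count a batter can face: 0-3 balls and 0-2 strikes.
pitch_counts = [f"{balls}-{strikes}" for balls, strikes in product(range(4), range(3))]

# Figures quoted in the text, taken as given.
down_and_distance_options = 28
field_position_options = 98
nfl_situations = down_and_distance_options * field_position_options

print(f"baseball pitch counts: {len(pitch_counts)}")
print(f"NFL down, distance and field position combinations: {nfl_situations}")
```

That works out to 12 situations against 2,744, before surfaces, weather or personnel are considered, and with roughly a tenth as many games over which to fill each bucket with data.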

I probably don’t need to tell you, but that is a lot of different situations and even more outcomes.

What is possible, however, is for the NFL to use data to advise the way in which they go about things. It might not be possible to calculate what every single free agent is worth, but it might be feasible in the not-too-distant future to generate a value which at least indicates which ballpark teams should be looking at when they pay free agents without the error margins making such calculations meaningless.

Therefore, the Panthers hiring a new director of analytics probably won’t usher in a Theo Epstein-like rise to glory, but carefully and intelligently including data-driven arguments in decision making can be hugely beneficial to both the Panthers’ coaching staff and front office – it can aid in game prep, situational management, scouting, contract negotiations and roster composition.

“From Day One, Marty [Hurney] has included me in conversations,” said Rajack on Panthers.com. “He’s had me sit in on meetings, he’s invited me to attend pro scouting meetings that are going to happen.”

“It’s just been entire openness.”

The evidence from almost every other sport around the world is that this is a wave that is coming – this hire gives the Panthers a real chance to ride that wave rather than getting crushed beneath it.